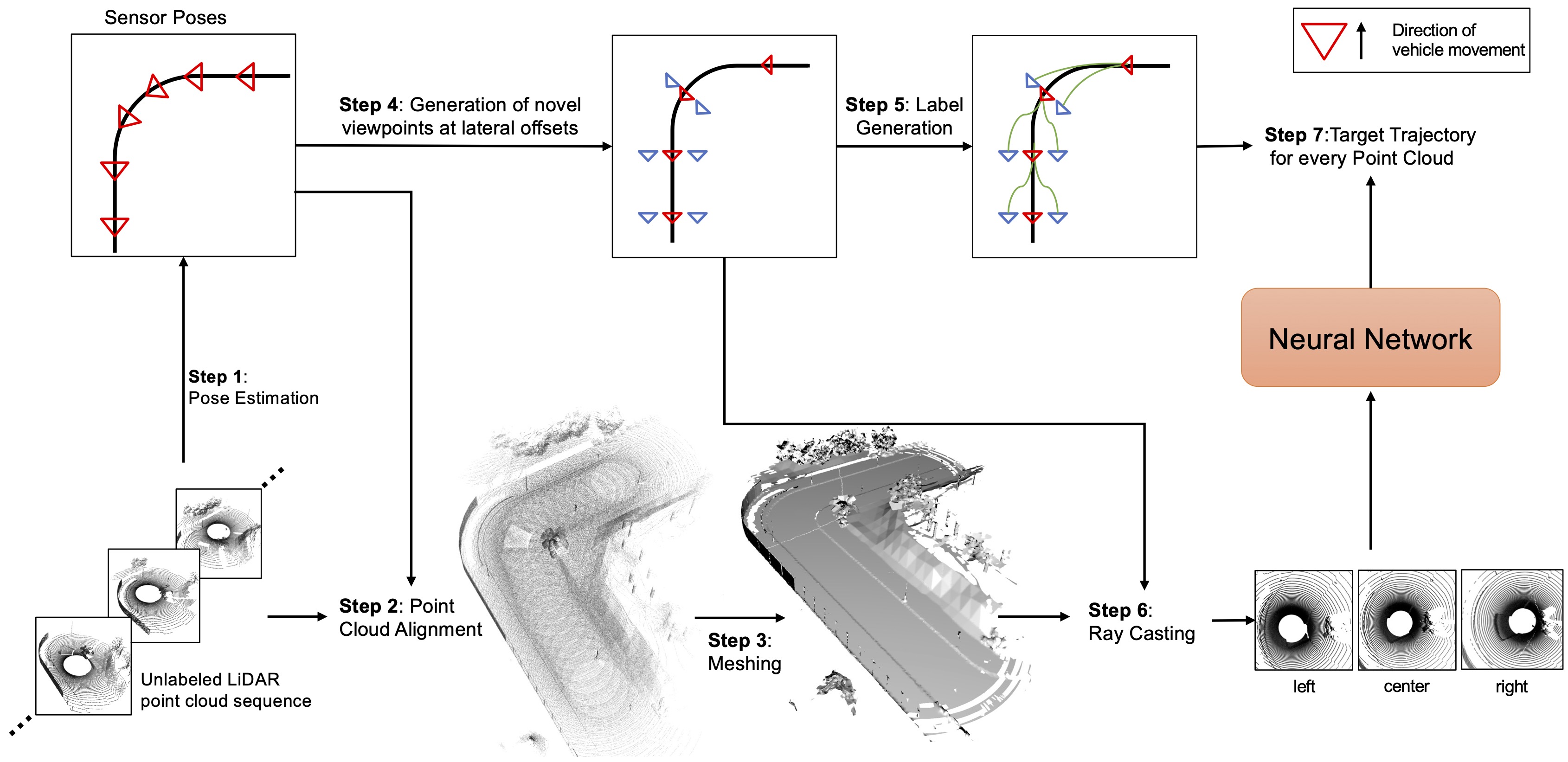

Deep learning models for self-driving cars require a diverse training dataset to handle critical driving scenarios on public roads safely. This includes data from divergent trajectories, such as those leading into the oncoming traffic lane or onto sidewalks. Such data would be too dangerous to collect in the real world. Data augmentation approaches have been proposed to tackle this issue using RGB images. However, solutions based on LiDAR sensors are scarce. We therefore propose synthesizing additional LiDAR point clouds from novel viewpoints without physically driving at dangerous positions. The LiDAR view synthesis is done using mesh reconstruction and ray casting. We train a deep learning model that takes a LiDAR scan as input and predicts the future trajectory as output. A waypoint controller is then applied to this predicted trajectory to determine the throttle and steering labels of the ego-vehicle. Our method requires expert driving labels for neither the original nor the synthesized LiDAR sequence. Instead, we infer labels from LiDAR odometry. We demonstrate the effectiveness of our approach in a comprehensive online evaluation and with a comparison to concurrent work. Our results show the importance of synthesizing additional LiDAR point clouds, particularly in terms of model robustness.
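The label path described in the abstract (odometry poses turned into a future trajectory, then a waypoint controller turning that trajectory into steering and throttle) can be illustrated with a minimal sketch. It assumes 2D `(x, y, yaw)` odometry poses rather than full 6-DoF poses. The controller is a swapped-in pure-pursuit steering law with proportional throttle, not necessarily the controller used in the paper. The function names, gains, wheelbase, lookahead and time step are all hypothetical.

```python
import math

import numpy as np


def to_ego_frame(poses: np.ndarray, t: int, horizon: int) -> np.ndarray:
    """Express the next `horizon` odometry positions in the ego frame of step t.

    poses: (N, 3) array of world-frame (x, y, yaw), assumed to come from
    LiDAR odometry and reduced to 2D for this sketch.
    Returns a (horizon, 2) array with x pointing forward and y pointing left.
    """
    x0, y0, yaw0 = poses[t]
    offsets = poses[t + 1 : t + 1 + horizon, :2] - np.array([x0, y0])
    c, s = math.cos(yaw0), math.sin(yaw0)
    # Rotation by -yaw0 maps world-frame offsets into the ego frame.
    world_to_ego = np.array([[c, s], [-s, c]])
    return offsets @ world_to_ego.T


def waypoint_controller(
    waypoints: np.ndarray,
    current_speed: float = 0.0,
    dt: float = 0.5,          # hypothetical time between waypoints [s]
    wheelbase: float = 2.7,   # hypothetical wheelbase [m]
    lookahead: float = 4.0,   # hypothetical pure-pursuit lookahead [m]
    max_steer: float = math.radians(35.0),  # hypothetical steering limit
    k_speed: float = 0.5,     # hypothetical proportional speed gain
) -> tuple[float, float]:
    """Map ego-frame waypoints to (steer, throttle); steer > 0 means turn left."""
    # Steering: pure pursuit towards the first waypoint beyond the lookahead.
    dists = np.linalg.norm(waypoints, axis=1)
    beyond = dists >= lookahead
    idx = int(np.argmax(beyond)) if beyond.any() else len(waypoints) - 1
    tx, ty = waypoints[idx]
    alpha = math.atan2(ty, tx)  # angle between heading and target waypoint
    steer_angle = math.atan2(2.0 * wheelbase * math.sin(alpha), math.hypot(tx, ty))
    steer = float(np.clip(steer_angle / max_steer, -1.0, 1.0))

    # Throttle: target speed from the mean spacing of consecutive waypoints.
    path = np.vstack([np.zeros(2), waypoints])
    spacing = np.linalg.norm(np.diff(path, axis=0), axis=1)
    target_speed = float(spacing.mean()) / dt
    throttle = float(np.clip(k_speed * (target_speed - current_speed), 0.0, 1.0))
    return steer, throttle


if __name__ == "__main__":
    # Straight drive along world +x at 1 m per odometry step.
    poses = np.array([[float(i), 0.0, 0.0] for i in range(10)])
    wps = to_ego_frame(poses, t=0, horizon=4)
    print(wps)
    print(waypoint_controller(wps, current_speed=1.0))
```

Because labels come only from the recorded poses, the same functions apply unchanged to a synthesized, laterally shifted sequence once its poses are known; the sign convention for steering (left positive here) is an assumption and may need flipping for a given simulator.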

We synthesize LiDAR-like point clouds from novel viewpoints, lateral to the original trajectory. The animations below depict the importance of aligning the point clouds.

One example shows a case where the synthesized LiDAR scan lies too far off the reference trajectory. This leads to an unrealistic scenario in which the car drives straight through the barrier. (Green points denote the road, blue points the sidewalk, and red points barriers/fences.)
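The synthesis step places a virtual sensor at a lateral offset from a recorded pose and casts rays against the reconstructed mesh. The sketch below assumes the mesh is already available as NumPy `vertices`/`faces` arrays and intersects every ray with every triangle using the brute-force Möller-Trumbore test. The ray pattern (azimuth count, elevation angles) and all function names are hypothetical; a practical pipeline would use an accelerated ray caster such as Open3D's `RaycastingScene`.

```python
import numpy as np


def lateral_sensor_origin(position, yaw: float, offset: float) -> np.ndarray:
    """Shift a recorded sensor position sideways; offset > 0 moves it to the left."""
    left = np.array([-np.sin(yaw), np.cos(yaw), 0.0])
    return np.asarray(position, dtype=float) + offset * left


def make_ray_directions(yaw: float = 0.0, n_azimuth: int = 360,
                        elevations_deg=(-15.0, -5.0, 0.0, 5.0, 15.0)) -> np.ndarray:
    """Unit ray directions of a hypothetical spinning LiDAR, rotated by the sensor yaw."""
    az = np.linspace(-np.pi, np.pi, n_azimuth, endpoint=False) + yaw
    el = np.radians(np.asarray(elevations_deg, dtype=float))
    A, E = np.meshgrid(az, el)
    dirs = np.stack([np.cos(E) * np.cos(A), np.cos(E) * np.sin(A), np.sin(E)], axis=-1)
    return dirs.reshape(-1, 3)


def ray_cast(origin, directions, vertices, faces, max_range=100.0, eps=1e-9):
    """Return the nearest mesh hit (world frame) for every ray that hits something."""
    origin = np.asarray(origin, dtype=float)
    v0 = vertices[faces[:, 0]]                       # (F, 3)
    e1 = vertices[faces[:, 1]] - v0                  # (F, 3) first triangle edge
    e2 = vertices[faces[:, 2]] - v0                  # (F, 3) second triangle edge
    p = np.cross(directions[:, None, :], e2[None])   # (R, F, 3)
    det = np.einsum("rfk,fk->rf", p, e1)
    parallel = np.abs(det) < eps                     # ray parallel to triangle plane
    inv_det = 1.0 / np.where(parallel, 1.0, det)
    s = origin - v0                                  # (F, 3)
    q = np.cross(s, e1)                              # (F, 3)
    u = np.einsum("rfk,fk->rf", p, s) * inv_det      # barycentric coordinate u
    v = np.einsum("rk,fk->rf", directions, q) * inv_det  # barycentric coordinate v
    # Ray parameter t (a distance, since directions are unit vectors).
    t = np.einsum("fk,fk->f", e2, q)[None, :] * inv_det
    hit = (~parallel) & (u >= 0) & (v >= 0) & (u + v <= 1) & (t > eps) & (t <= max_range)
    t = np.where(hit, t, np.inf)
    nearest = t.min(axis=1)                          # closest hit per ray
    keep = np.isfinite(nearest)                      # rays that miss produce no point
    return origin + directions[keep] * nearest[keep, None]
```

To obtain the scan in the shifted sensor's own frame, subtract the origin and rotate the points by the negative yaw. Nothing in the ray casting itself checks whether the shifted pose is plausible, so the lateral offset has to stay small enough that the virtual sensor does not end up past a barrier, as in the failure case above.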

@inproceedings{schmidt2023lidar,
  title = {LiDAR View Synthesis for Robust Vehicle Navigation Without Expert Labels},
  booktitle = {IEEE 26th International Conference on Intelligent Transportation Systems},
  author = {Schmidt, Jonathan and Khan, Qadeer and Cremers, Daniel},
  year = {2023},
}