Technical University of Munich

NeurIPS 2025

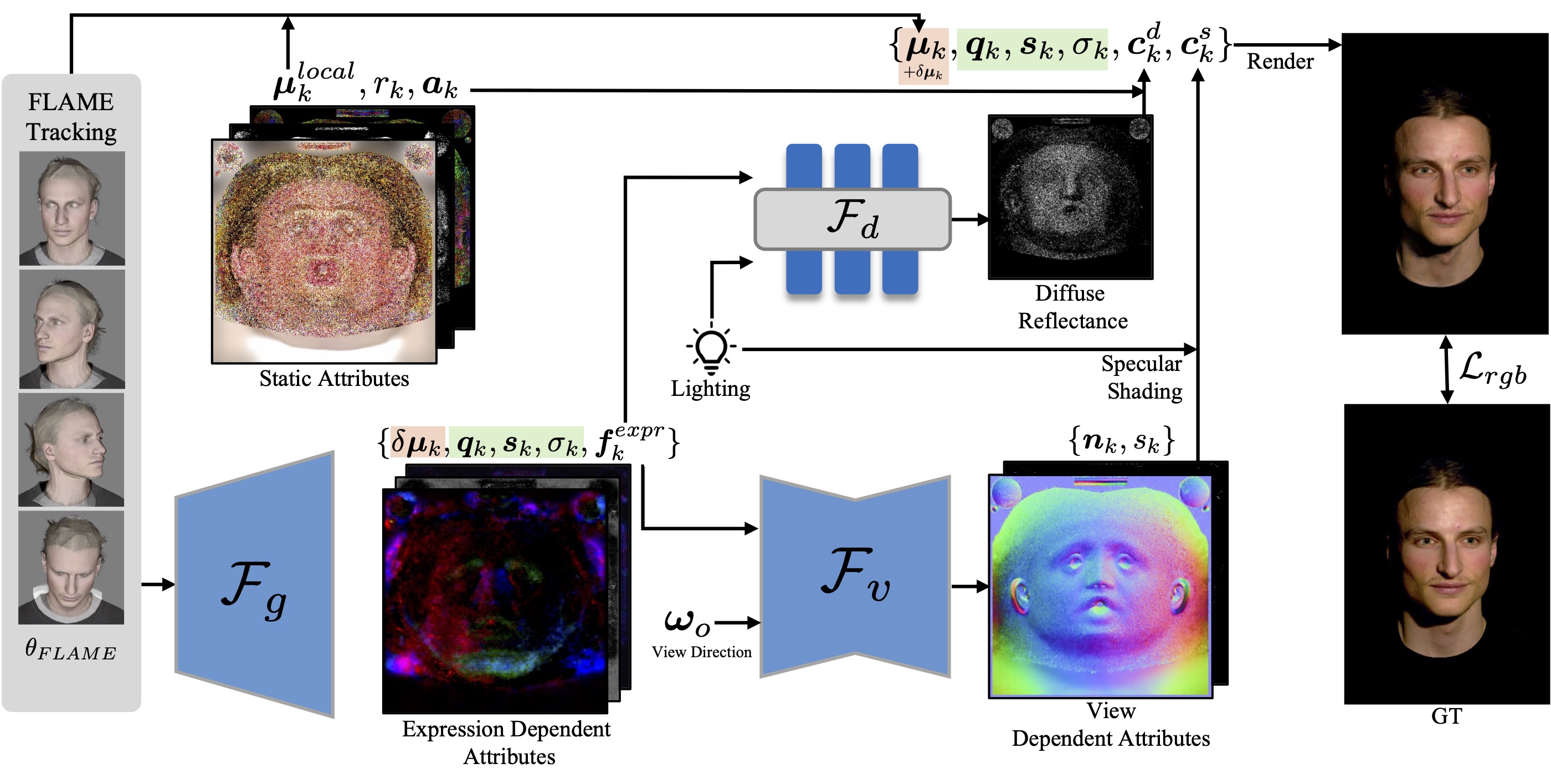

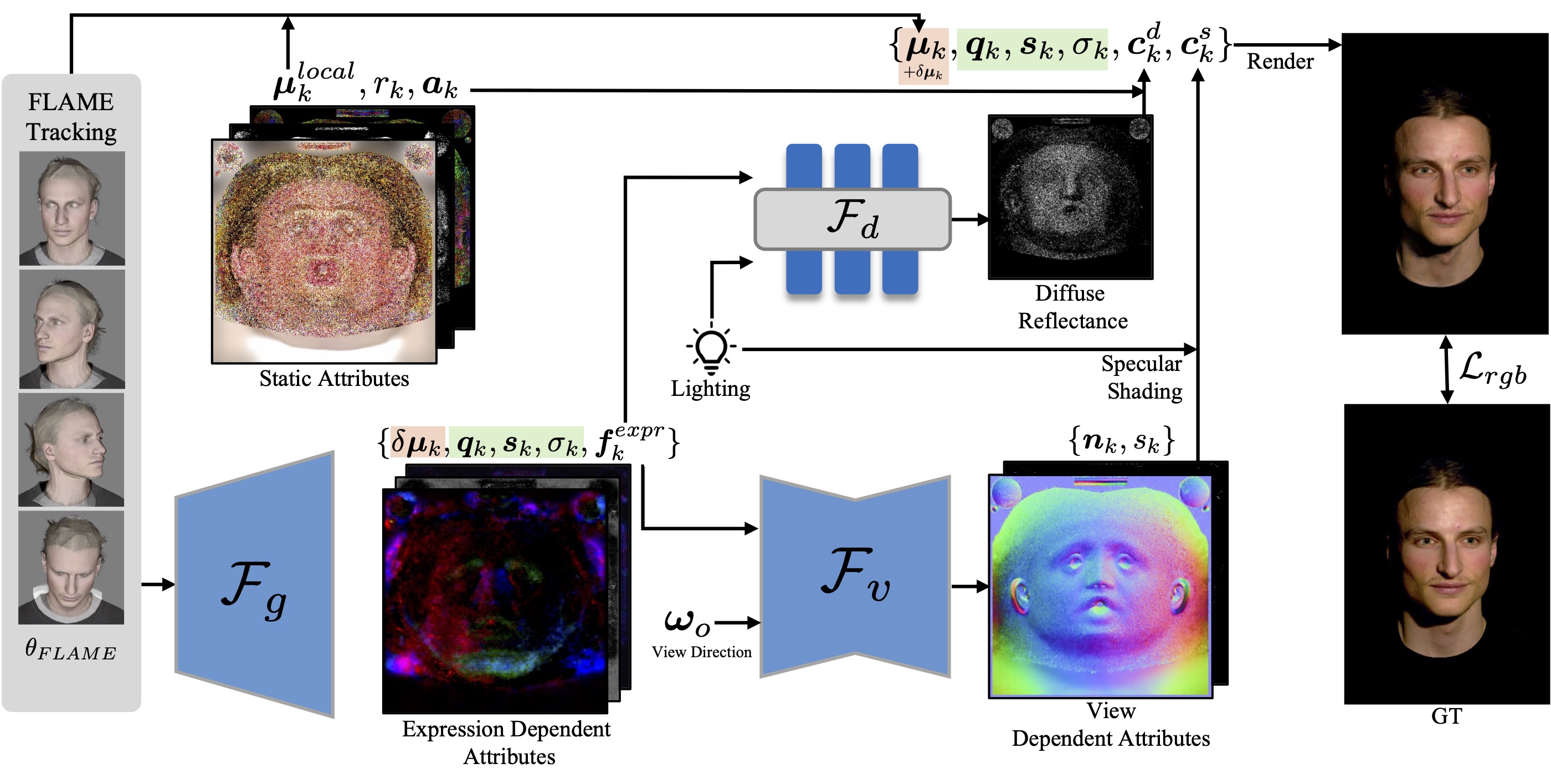

We introduce BecomingLit, a novel method for reconstructing relightable, high-resolution head avatars that can be rendered from novel viewpoints at interactive rates. To this end, we propose a new low-cost light stage capture setup, tailored specifically to capturing faces. Using this setup, we collect a novel dataset consisting of diverse multi-view sequences of numerous subjects under varying illumination conditions and facial expressions. By leveraging our new dataset, we introduce a new relightable avatar representation based on 3D Gaussian primitives that we animate with a parametric head model and an expression-dependent dynamics module. We propose a new hybrid neural shading approach, combining a neural diffuse BRDF with an analytical specular term. Our method reconstructs disentangled materials from our dynamic light stage recordings and enables all-frequency relighting of our avatars with both point lights and environment maps. In addition, our avatars can easily be animated and controlled from monocular videos. We validate our approach in extensive experiments on our dataset, where we consistently outperform existing state-of-the-art methods in relighting and reenactment by a significant margin.

BecomingLit represents head avatars with 3D Gaussian primitives animated with FLAME parameters. The appearance is represented with a hybrid neural shading model, where the diffuse light transport is estimated with a neural network and specular reflectance is modeled with a Cook-Torrance BRDF, enabling all-frequency relighting with point lights and environment maps.
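To make the specular half of the hybrid model concrete, the following is a minimal sketch of a Cook-Torrance specular term under a single point light. It is not the authors' implementation: the GGX normal distribution, Schlick Fresnel approximation, and Schlick-GGX geometry term are common choices assumed here, and the function names, the scalar `f0`, and the `diffuse_radiance` argument (a stand-in for the output of the neural diffuse network) are all hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def cook_torrance_specular(n, v, l, roughness, f0):
    """Scalar Cook-Torrance specular BRDF value for normal n, view v, light l.

    Assumed components (not confirmed by the source): GGX distribution,
    Schlick Fresnel, Schlick-GGX geometry term.
    """
    n, v, l = normalize(n), normalize(v), normalize(l)
    n_dot_l = dot(n, l)
    n_dot_v = dot(n, v)
    # Light or viewer below the surface: no specular contribution.
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0

    h = normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h = max(dot(n, h), 0.0)
    v_dot_h = max(dot(v, h), 0.0)

    # Clamp roughness to avoid a division by zero for perfect mirrors.
    alpha = max(roughness, 1e-3) ** 2
    a2 = alpha * alpha

    # GGX normal distribution D.
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    d = a2 / (math.pi * denom * denom)

    # Schlick Fresnel F.
    f = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

    # Schlick-GGX geometry term G (separable form).
    k = alpha / 2.0
    g = (n_dot_l / (n_dot_l * (1.0 - k) + k)) * (n_dot_v / (n_dot_v * (1.0 - k) + k))

    return d * f * g / (4.0 * n_dot_l * n_dot_v)


def shade_point_light(diffuse_radiance, n, v, l, light_intensity, roughness, f0):
    """Add the analytical specular term to a precomputed diffuse radiance.

    diffuse_radiance is a placeholder for the neural diffuse prediction.
    """
    n_dot_l = max(dot(normalize(n), normalize(l)), 0.0)
    specular = cook_torrance_specular(n, v, l, roughness, f0) * light_intensity * n_dot_l
    return diffuse_radiance + specular
```

In this sketch the diffuse and specular contributions are simply summed per light; extending it to environment maps would require integrating the specular term over incoming directions, which is not shown here.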

@inproceedings{schmidt2025becominglit,
  title={BecomingLit: Relightable Gaussian Avatars with Hybrid Neural Shading},
  author={Jonathan Schmidt and Simon Giebenhain and Matthias Niessner},
  booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year={2025},
}